Overview

Digital Art Glove is an integrated sensor glove that controls a digital canvas. Sitting at the intersection of art and technology, the glove aims to make digital creativity more accessible and fun!

Team Members: Mika Nogami, Beatrice Tam, Josiann Zhou

Technologies:

Software: OpenCV, MediaPipe Hands, ReactJS, Python, NextJS, p5.js

Hardware/Mech: SolidWorks, 3D Printing, Sensors (Flex, Force, IMU), ESP32

Role: Mechanical Prototyper, Graphic Designer, Developer of Freeform Mode in p5.js

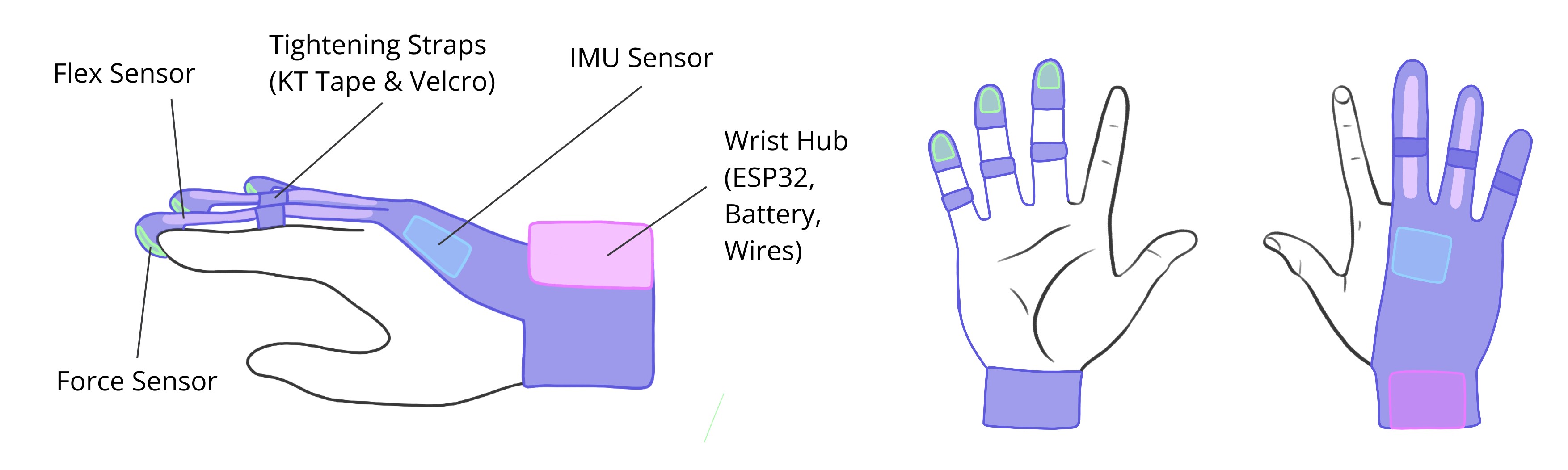

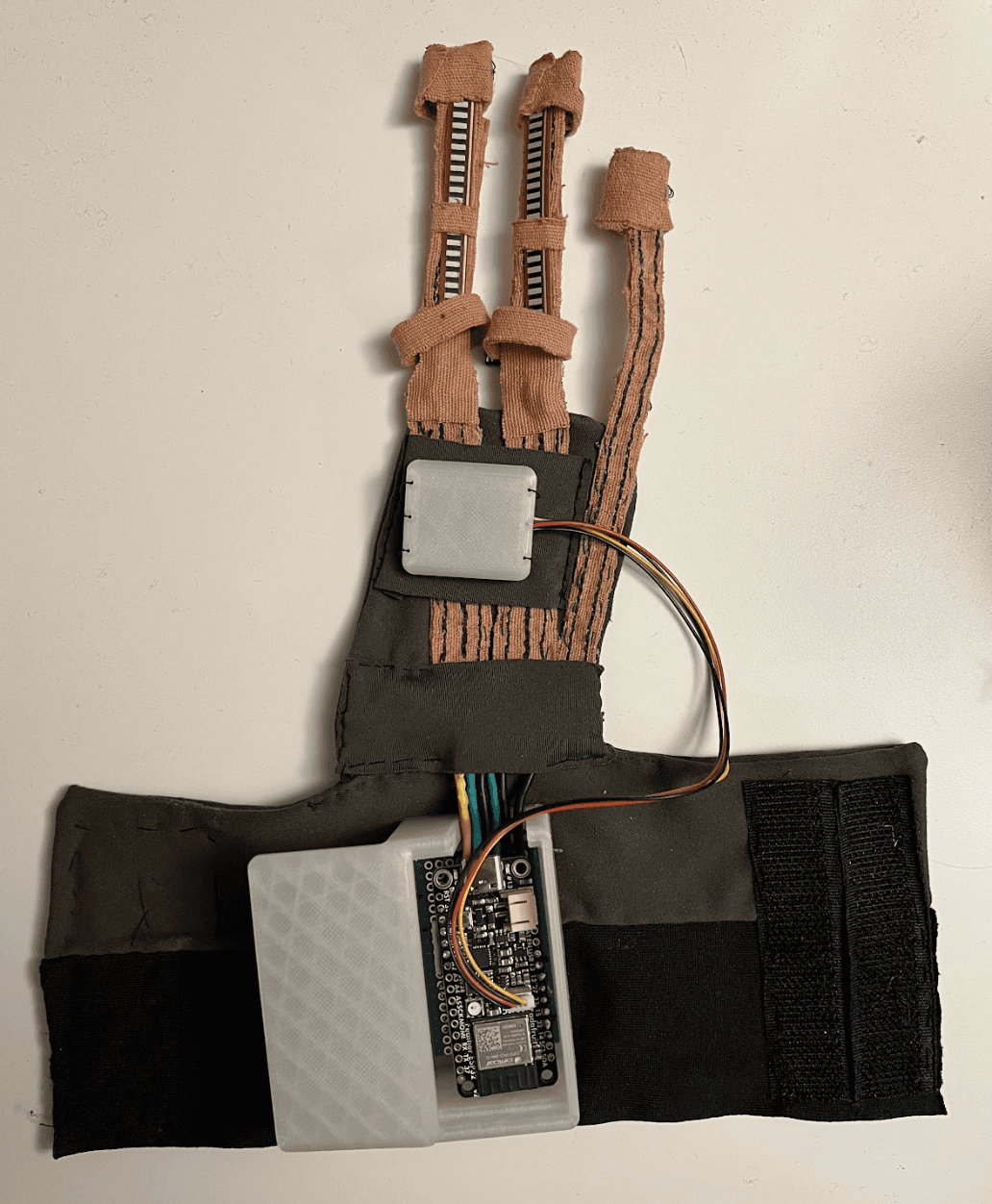

Sensors

The glove design features flex sensors on the middle and ring fingers, as well as force sensors on the middle, ring and pinky fingers. Additionally, an IMU sensor sits on top of the hand near the index finger knuckle, as this spot tends to remain still during finger flexion. The main hub containing the ESP32, wires and battery is located on the wrist.

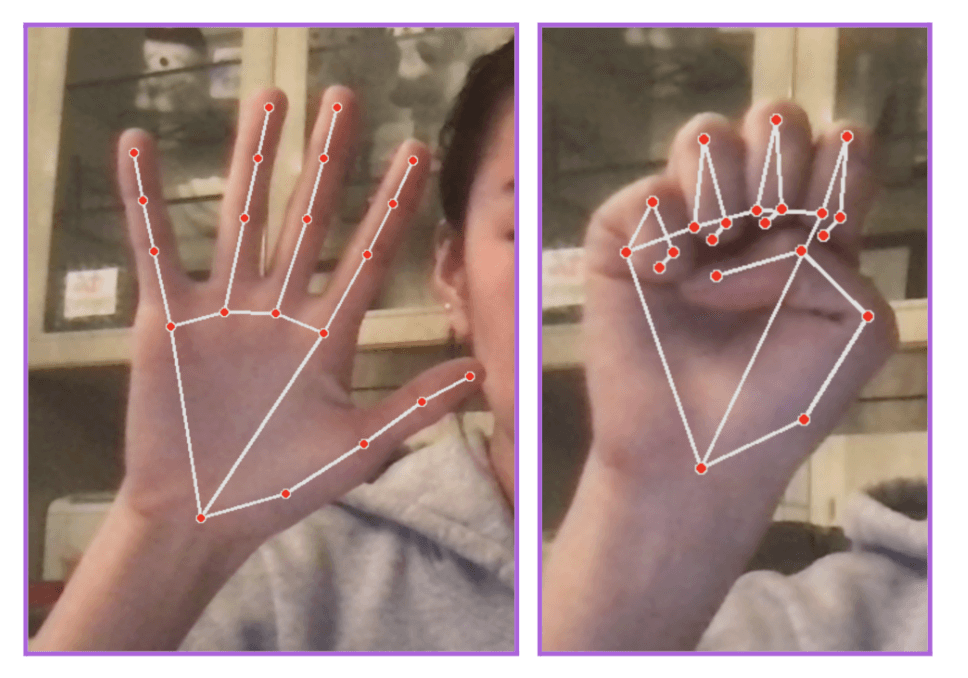

MediaPipe Hands

MediaPipe is a framework that helps users implement machine learning tasks as a pipeline, which is essential because our system continuously captures data through video. MediaPipe Hands is its hand-tracking solution.

The MediaPipe Hands pipeline consists of two convolutional neural network models:

Single-Shot Detector (SSD) which does palm detection

Regression Model that predicts the 21 hand landmarks
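As a minimal sketch of how the landmark output could drive the canvas, the snippet below maps the index fingertip (landmark 8 of the 21) from MediaPipe's normalized image coordinates to canvas pixels. The `Landmark` tuple, the `fingertip_to_canvas` helper, the mirroring option and the canvas dimensions are assumptions for illustration, not the project's actual code.

```python
from typing import NamedTuple, Sequence, Tuple

# Index of the index fingertip among MediaPipe's 21 hand landmarks.
INDEX_FINGER_TIP = 8


class Landmark(NamedTuple):
    """Hypothetical stand-in for one landmark, in normalized image coordinates."""
    x: float  # 0.0 = left edge of the frame, 1.0 = right edge
    y: float  # 0.0 = top edge of the frame, 1.0 = bottom edge


def fingertip_to_canvas(
    landmarks: Sequence[Landmark],
    canvas_w: int,
    canvas_h: int,
    mirror: bool = True,
) -> Tuple[int, int]:
    """Map the index fingertip to a pixel position on the canvas."""
    tip = landmarks[INDEX_FINGER_TIP]
    # Landmarks can fall slightly outside the frame, so clamp to [0, 1].
    x = min(max(tip.x, 0.0), 1.0)
    y = min(max(tip.y, 0.0), 1.0)
    if mirror:
        # Assumption: flip horizontally so the cursor follows the hand like a mirror.
        x = 1.0 - x
    return round(x * (canvas_w - 1)), round(y * (canvas_h - 1))
```

Whether mirroring is needed depends on how the camera faces the user, so it is left as a parameter here.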

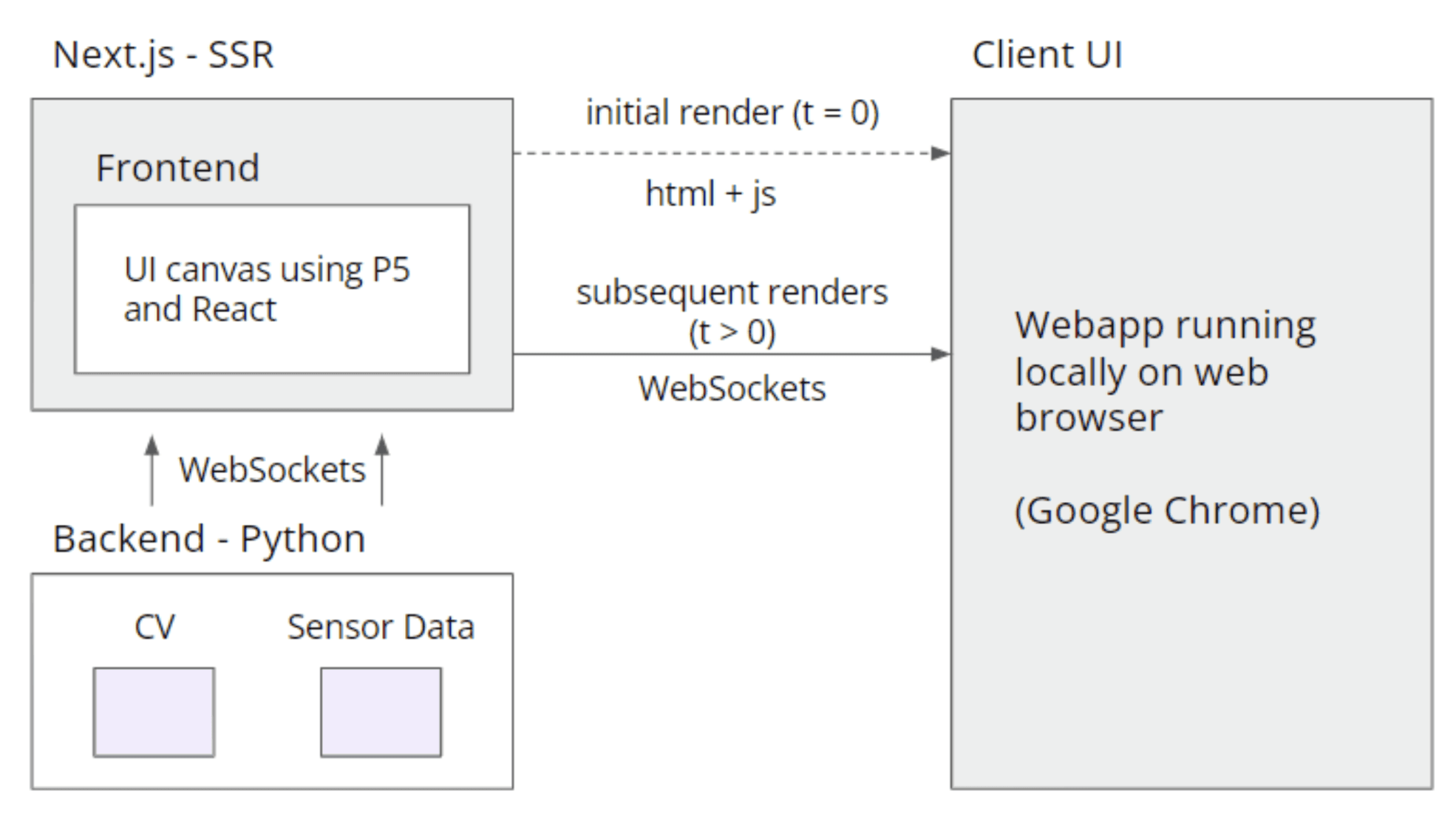

The position data is sent from the backend to the frontend using WebSockets.
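The exact message format is not described here, so the following is only a sketch of what a position message could look like if it were sent as JSON text over the WebSocket. The field names (`type`, `x`, `y`, `drawing`) and both helper functions are hypothetical.

```python
import json
from typing import Tuple


def encode_position(x: int, y: int, drawing: bool) -> str:
    """Serialize one fingertip position as a JSON string (hypothetical schema)."""
    return json.dumps({"type": "position", "x": x, "y": y, "drawing": drawing})


def decode_position(message: str) -> Tuple[int, int, bool]:
    """Parse a message produced by encode_position, rejecting other types."""
    data = json.loads(message)
    if data.get("type") != "position":
        raise ValueError("unexpected message type")
    return data["x"], data["y"], data["drawing"]
```

A `type` field like this would let sensor events and position updates share one connection, though the project may structure its messages differently.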

Digital Canvas

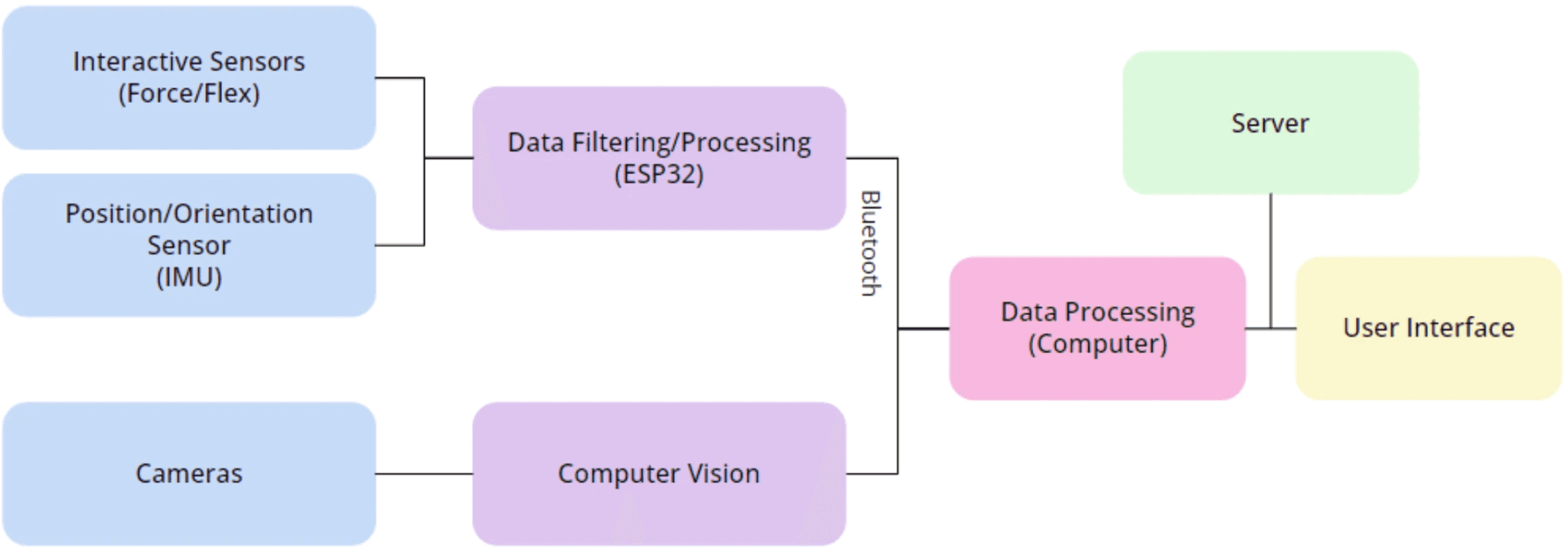

Our system includes the glove, camera and user interface, with four processing tasks.

Collecting Sensor Data

Sensor data (flex, force, IMU) is sent from the ESP32 to the backend using its built-in Bluetooth module.

Running Computer Vision Program

An external ArduCam module records the user's hand and sends the data to the computer to be processed.

Backend Data Processing

The data undergoes two subprocesses to improve line rendering.

Displaying UI

The user interface is displayed in a local browser and is built with NextJS and ReactJS. React-p5 is used to build the canvas.

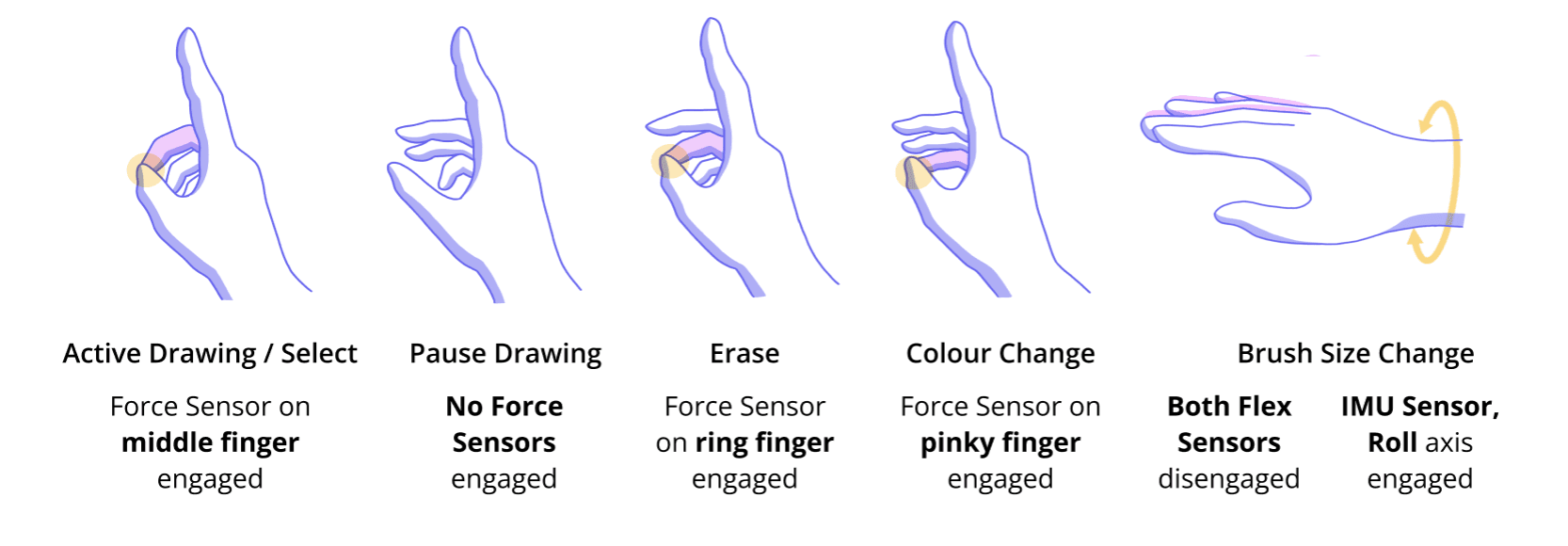

Gestures

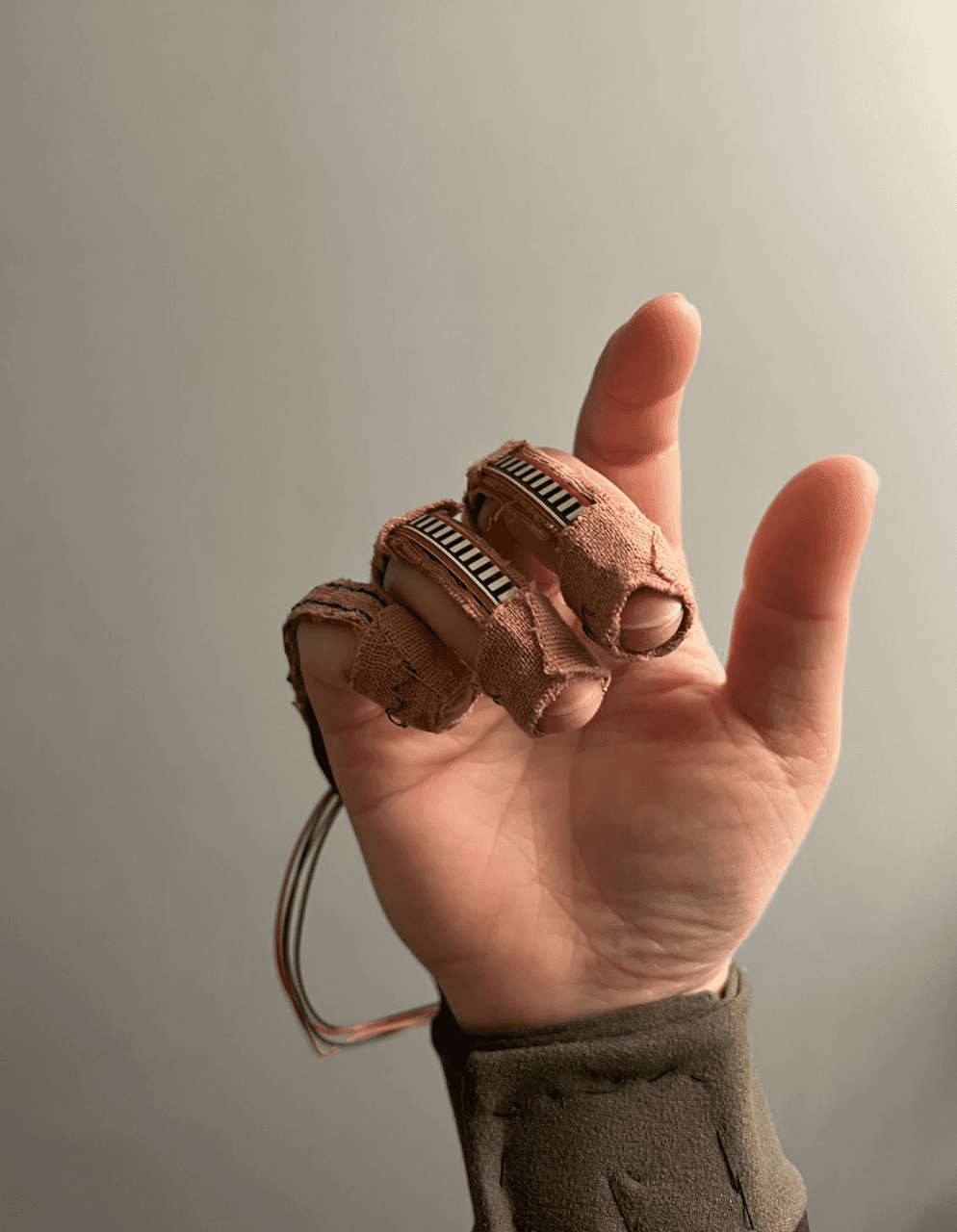

The force, flex and IMU sensors are each mapped to different functions, so the user can control the interface solely with the glove. The index finger should remain pointed upwards so the tip can be detected by the computer vision program. The thumb toggles through the functions by pressing the force sensors located on the middle, ring and pinky fingers. The IMU sensor is used in conjunction with the flex sensors to change the brush size. If both flex sensors are disengaged, meaning the fingers are extended, the IMU measures wrist tilt to change the brush size. Bending those same fingers locks in the brush size.
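The brush-size gesture can be sketched as a small update function. This is an illustration under stated assumptions: flex readings are taken to be normalized to 0.0 (straight) through 1.0 (fully bent), and the threshold, tilt range and brush limits are hypothetical values. It also assumes that bending either finger is enough to lock the size.

```python
# All constants below are hypothetical, chosen only for illustration.
FLEX_BENT_THRESHOLD = 0.5        # normalized flex reading above which a finger counts as bent
MIN_BRUSH, MAX_BRUSH = 2.0, 40.0  # brush size limits in pixels
MAX_TILT_DEG = 45.0               # wrist tilt that maps to the smallest/largest brush


def update_brush_size(
    current_size: float,
    flex_middle: float,
    flex_ring: float,
    tilt_deg: float,
) -> float:
    """Return the new brush size given the latest flex and IMU readings."""
    fingers_extended = (
        flex_middle < FLEX_BENT_THRESHOLD and flex_ring < FLEX_BENT_THRESHOLD
    )
    if not fingers_extended:
        # Fingers bent: the brush size is locked in.
        return current_size
    # Fingers extended: map wrist tilt linearly onto the brush size range.
    tilt = min(max(tilt_deg, -MAX_TILT_DEG), MAX_TILT_DEG)
    fraction = (tilt + MAX_TILT_DEG) / (2 * MAX_TILT_DEG)
    return MIN_BRUSH + fraction * (MAX_BRUSH - MIN_BRUSH)
```

For example, with the fingers extended and the wrist level (`tilt_deg=0.0`), this sketch returns the midpoint of the brush range; once the fingers bend, later tilt readings are ignored.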

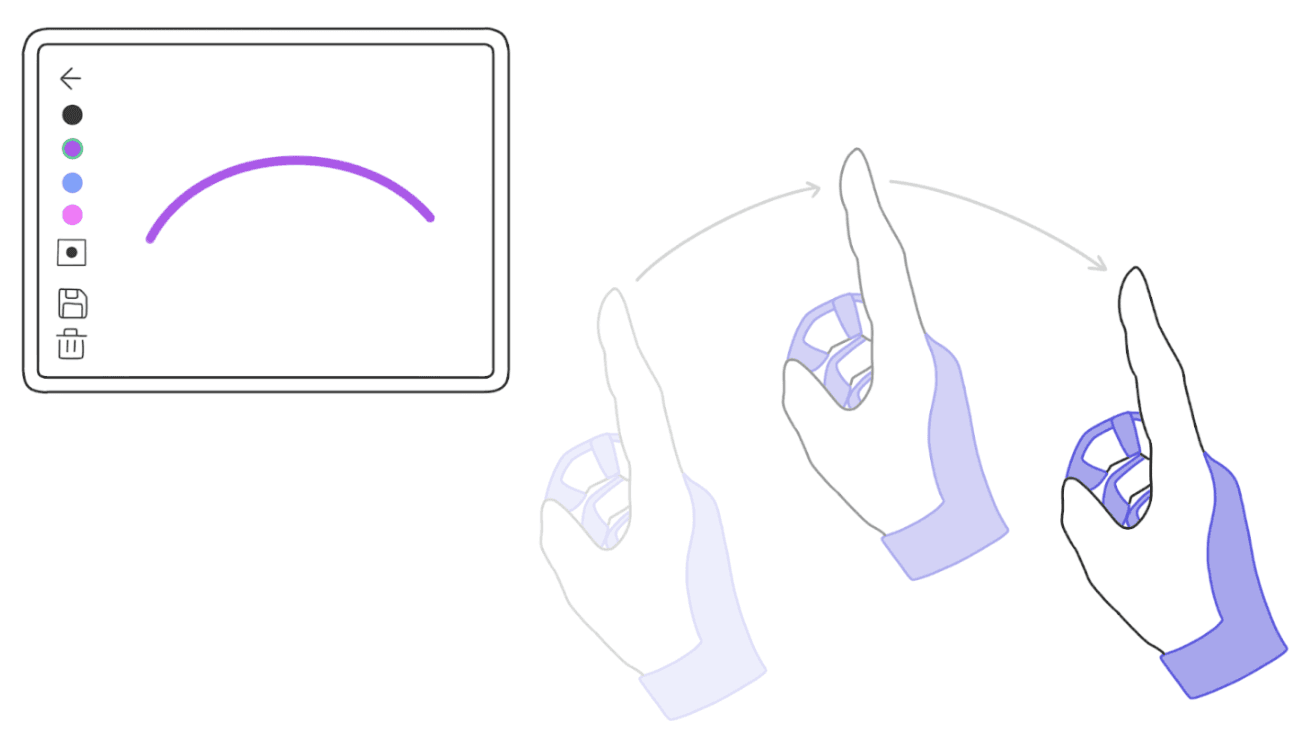

Mode 1: Gesture Painting

The user interface prompts the user to select between two options: gesture painting and freeform art. The user can toggle through different colours using the glove, and the selected colour is highlighted on the interface. The current brush size is displayed as well. The user can save or clear the drawing by hovering over the respective icon and using the 'active drawing/select' gesture.
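One way colour toggling like this could work is to advance through a palette once per press of a force sensor, rather than continuously while it is held. The sketch below shows that idea; the palette, the press threshold, the normalized force scale and the `ColourSelector` class are all assumptions rather than the project's implementation.

```python
from typing import List, Optional

# Hypothetical palette and threshold, for illustration only.
PALETTE = ["red", "green", "blue", "black"]
PRESS_THRESHOLD = 0.6  # normalized force reading that counts as a press


class ColourSelector:
    """Cycle through a palette once per press of a force sensor."""

    def __init__(self, palette: Optional[List[str]] = None) -> None:
        self.palette = list(palette) if palette is not None else list(PALETTE)
        self.index = 0
        self._was_pressed = False

    def update(self, force: float) -> str:
        """Feed the latest force reading and return the selected colour."""
        pressed = force >= PRESS_THRESHOLD
        # Advance only on the transition from released to pressed, so
        # holding the sensor down does not skip through several colours.
        if pressed and not self._was_pressed:
            self.index = (self.index + 1) % len(self.palette)
        self._was_pressed = pressed
        return self.palette[self.index]
```

The returned colour name is what the interface would highlight as the current selection.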

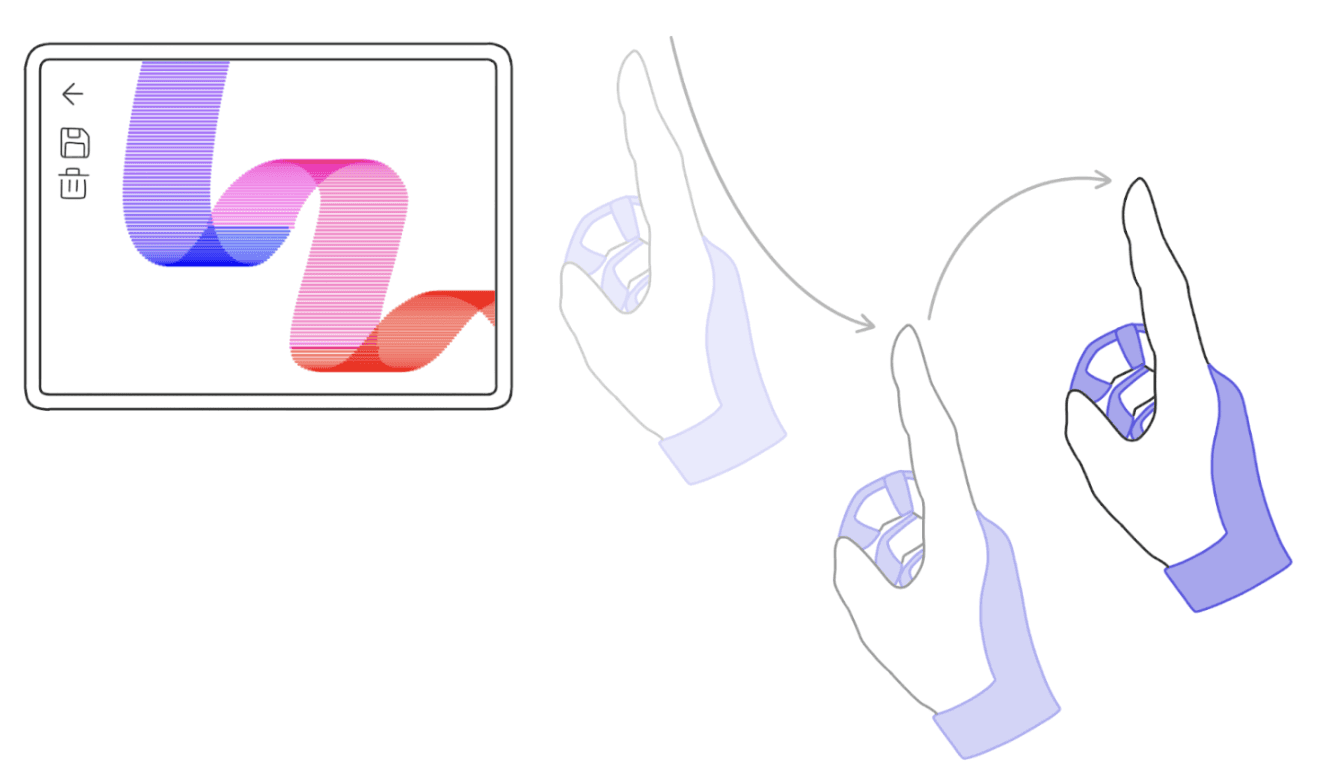

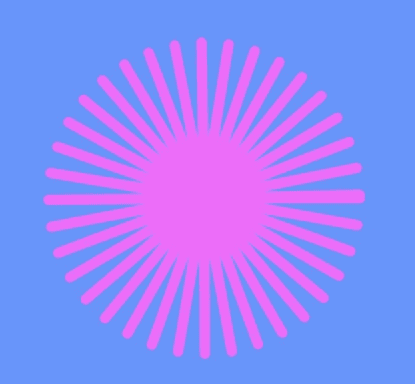

Mode 2: Freeform Art

The p5.js library includes many functions to create shape elements such as lines, ellipses, and rectangles, as well as functions to alter and animate these forms. From the basic functions, we created custom functions to design the freeform mode of the glove application.

We created the prototype with three factors in mind: adjustability, robustness and user comfort. At UBC Applied Science's Design and Innovation Day, the glove would be showcased and demoed by users with a variety of hand sizes. With multiple people taking the glove on and off, the device had to be robust enough that the components wouldn't get damaged and the wiring would stay intact.

The 'skeleton' prototype design was fabricated using kinesiology tape and spandex/elastane fabric, with velcro to secure it to the hand and wrist. While our team originally imagined a glove that would cover the entire hand and fingers, a completely covered hand is very difficult for the computer vision program to track.

Routing the wiring along the fingers posed a challenge, as there was not much surface area for the wires to attach to. The flex and force sensors each have two connections, meaning the middle and ring fingers each carried four wires. Another challenge was that flexing the fingers put tension on the wires. To address this, we used conductive thread sewn into the KT tape using a triple stretch stitch.

The conductive thread was soldered onto wires connected to the ESP32. The IMU bracket and main hub containing the battery and ESP32 were 3D printed using PLA filament.

Design and Innovation Day was a success: many students and faculty in UBC Engineering, as well as community members, were able to try the glove and its functions. We received a lot of positive feedback, and there was real excitement about the project.

As an integrated mechanical-electrical-software unit, the glove could be applied to many other areas. By changing the output of the sensors from visual to audio, the glove could be used as an instrument. The sensors could also be programmed for use in settings like physical therapy, for interactive exercises. Our team plans to continue with the project!